I’ve long been a passionate advocate for VMware’s vRealize Log Insight. I remember trying it out just after the 1.0 release way back in 2013.

Along the way I’ve used it to build custom dashboards for all sorts of apps that had no monitoring capability of their own. I particularly appreciated its ability to quickly narrow in on an issue when troubleshooting and then set up an alert for future occurrences. Having spent over a decade in high-tempo operational environments, I found tools like Log Insight a huge help.

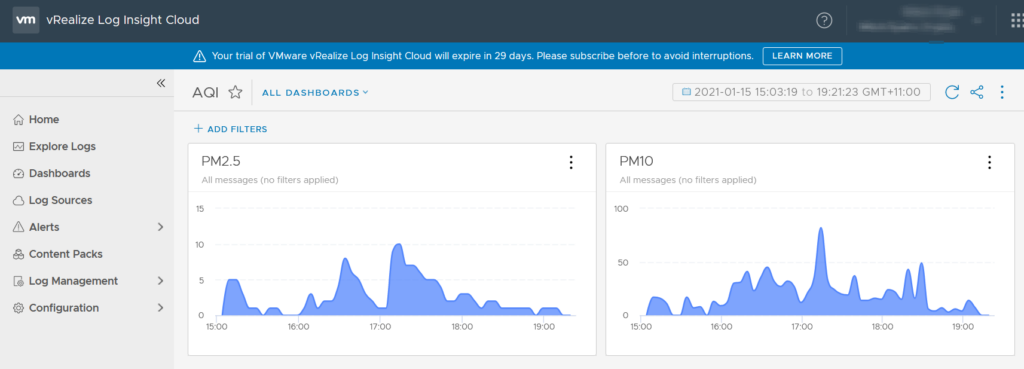

I recently bought a Raspberry Pi 4. Part of the reason I have a Pi at home is to monitor the AQI (Air Quality Index), in particular the PM2.5 and PM10 readings. I had this running for the last year or so on my Raspberry Pi 3 until its SD card finally failed, so I decided to migrate to a new setup.

So how am I going to get data into vRealize Log Insight Cloud (vRLIC)? Luckily, the list of supported data sources is quite long. I took a screenshot of the Sources tab in vRLIC to illustrate how many there are:

If you zoom right in, you’ll see that both Fluentd and Fluent Bit are supported, along with many other options.

- I signed up for a 30-day free trial of Log Insight Cloud.

- Once signed up, I ran the following on my Pi to install the Fluentd package and the vRLIC plugin:

```bash
gem install fluentd

wget https://github.com/vmware/fluent-plugin-vmware-log-intelligence/releases/download/v2.0.5/fluent-plugin-vmware-log-intelligence-2.0.5.gem && \
  gem install fluent-plugin-vmware-log-intelligence-2.0.5.gem
```

- I already have a Perl script that periodically reads data from my SDS011 sensor and writes the PM2.5 and PM10 values to two separate log files. So all my Fluentd config needs to do is tail those two files. Here is my fluentd.conf:

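```
<source>
  @type tail
  # Tail both sensor logs (PM2.5 and PM10) matched by this glob
  path /home/mitch/scripts/logs/pm*.log
  # Remembers how far each file has been read, across restarts
  pos_file /home/mitch/scripts/log/pm.log.pos
  tag aqi
  # Read each file from the beginning the first time it is seen
  read_from_head true
  # Keep each line as-is, stored in the record's "message" field
  <parse>
    @type none
  </parse>
</source>

<match aqi>
  @type vmware_log_intelligence
  endpoint_url https://data.mgmt.cloud.vmware.com/le-mans/v1/streams/ingestion-pipeline-stream
  verify_ssl true
  <headers>
    Content-Type application/json
    # Replace vRLI-API-Key-Here with your own vRLIC API key
    Authorization Bearer vRLI-API-Key-Here
    structure simple
  </headers>
  # Buffer to disk so queued records survive a Fluentd restart
  <buffer>
    @type file
    path /home/mitch/fluentd/lint.buf
    chunk_limit_records 300
    flush_interval 3s
    retry_max_times 3
  </buffer>
  <format>
    @type json
    tag_key text
  </format>
</match>
```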

- You’ll need a vRLIC API key to replace `vRLI-API-Key-Here` after “Authorization Bearer”; you can get one here.
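The Perl script that feeds these log files isn’t shown here. As a rough illustration of what such a script has to do, here is a Bash sketch (not the author’s Perl) that decodes one SDS011 data frame. It assumes the frame layout from the SDS011 datasheet: `AA C0`, PM2.5 low/high bytes, PM10 low/high bytes, two device-ID bytes, a checksum over those six data bytes, then `AB`, with readings in tenths of µg/m³. Reading the bytes off the serial port is left out, and the function name `decode_sds011_frame` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: decode one SDS011 data frame passed as ten hex bytes.
# Assumed layout: AA C0 PM25_lo PM25_hi PM10_lo PM10_hi ID1 ID2 CHECKSUM AB
decode_sds011_frame() {
  local -a b=("$@")
  if (( ${#b[@]} != 10 )); then
    echo "expected 10 bytes, got ${#b[@]}" >&2
    return 1
  fi
  # Check the header (AA), command byte (C0) and tail (AB)
  if (( 16#${b[0]} != 0xAA || 16#${b[1]} != 0xC0 || 16#${b[9]} != 0xAB )); then
    echo "not an SDS011 data frame" >&2
    return 1
  fi
  # Checksum: low 8 bits of the sum of the six data bytes (zero-based positions 2 to 7)
  local sum=0 i
  for i in 2 3 4 5 6 7; do
    sum=$(( sum + 16#${b[i]} ))
  done
  if (( (sum & 0xFF) != 16#${b[8]} )); then
    echo "checksum mismatch" >&2
    return 1
  fi
  # Readings are little-endian 16-bit values in tenths of a microgram per cubic metre
  local pm25=$(( 16#${b[3]} * 256 + 16#${b[2]} ))
  local pm10=$(( 16#${b[5]} * 256 + 16#${b[4]} ))
  printf 'PM2.5=%d.%d PM10=%d.%d\n' $(( pm25 / 10 )) $(( pm25 % 10 )) $(( pm10 / 10 )) $(( pm10 % 10 ))
}

# Sample frame: PM2.5 bytes D4 04, PM10 bytes 3A 0A, checksum 1D
decode_sds011_frame AA C0 D4 04 3A 0A A1 60 1D AB   # prints PM2.5=123.6 PM10=261.8
```

Validating the checksum before logging keeps a corrupted serial read from turning into a bogus spike on a dashboard later.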

- After I setup all of this, I simply ran

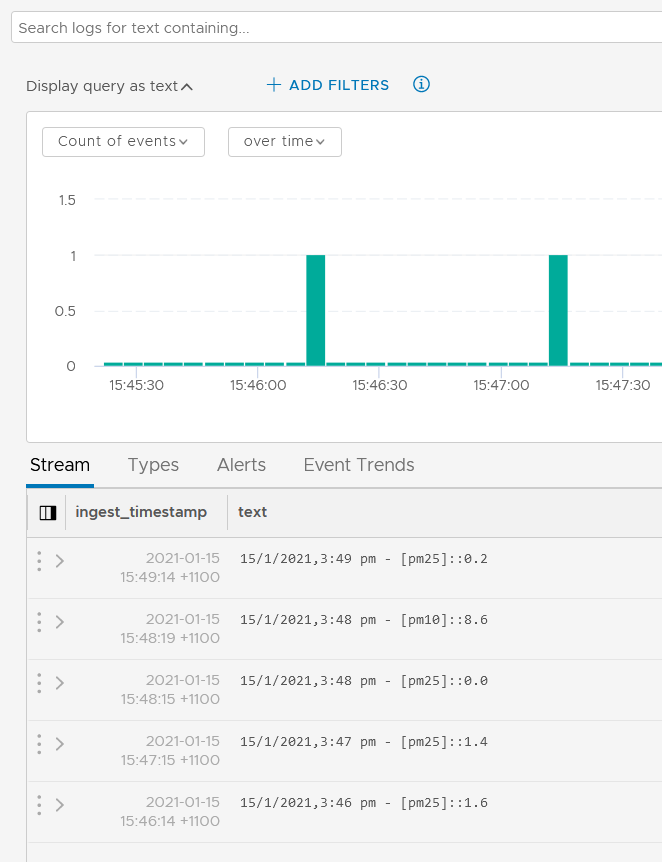

fluentd -c /path/to/fluentd.conf - After waiting a few minutes for my cron job to run the sds011 scripts, I checked to see that I had data flowing to vRLIC. Success!
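Rather than waiting on the cron job, you could also append a test line yourself to confirm the tail source picks it up. This is a minimal sketch: the helper name `append_test_reading`, the file names `pm25.log` and `pm10.log` (anything matching the config’s `pm*.log` glob would do) and the line format are all hypothetical. Because the source uses the `none` format, each line is forwarded unparsed in the record’s `message` field, so the exact format doesn’t matter to Fluentd.

```bash
#!/usr/bin/env bash
# Hypothetical helper for testing the Fluentd tail source without the sensor.
# File names and line format are assumptions, not what the author's Perl script writes.

# Directory watched by the <source> block; override LOG_DIR to test elsewhere.
LOG_DIR="${LOG_DIR:-/home/mitch/scripts/logs}"

# append_test_reading PM25 PM10
# Appends one UTC-timestamped line to each of two files matching pm*.log.
append_test_reading() {
  local pm25="$1" pm10="$2" ts
  ts="$(date -u '+%Y-%m-%dT%H:%M:%SZ')"
  mkdir -p "$LOG_DIR"
  printf '%s PM2.5 %s\n' "$ts" "$pm25" >> "$LOG_DIR/pm25.log"
  printf '%s PM10 %s\n' "$ts" "$pm10" >> "$LOG_DIR/pm10.log"
}
```

After sourcing it, `append_test_reading 12.3 20.1` writes one line to each file. If the files didn’t exist before, keep in mind that `in_tail` only rescans its glob every `refresh_interval` (60 seconds by default), so a brand-new file can take up to a minute to show up.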

My next post will show how to utilise this data in a vRLIC dashboard.